*This article was last updated on 20/10/2023.*

There are numerous components to a robust SEO strategy. One tool that is often overlooked is the Robots.txt file. Though it might seem trivial, a well-configured Robots.txt file can enhance the efficiency of search engine crawlers.

On the other hand, a misstep in its configuration can unintentionally hide your entire website from search engines, leading to a significant drop in organic traffic.

What is Robots.txt?

The robots.txt file is a simple text file located in the root directory of a website. Its primary function is to communicate with web crawlers. The file includes instructions on which pages or sections of the site they should or shouldn’t crawl.

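While its role in SEO is undeniable, the Robots.txt file is also useful for websites dealing with bandwidth issues or aiming to keep crawlers out of specific parts of their site. Keep in mind that it is not an access control mechanism: the file itself is publicly readable, and a disallowed URL can still show up in search results if other pages link to it.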

How Search Engine Crawlers Interact with Robots.txt

The first port of call for a search engine crawler visiting a website is the Robots.txt file, which it requests before diving deeper into the site. This file provides a roadmap, highlighting the areas the crawler can explore and those it should avoid.

Renowned search engines, like Google and Bing, respect the directives mentioned in the Robots.txt file. However, it’s crucial to note that not all web crawlers honor these directives. Malicious bots, for instance, might ignore the file entirely.
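To make that interaction concrete, here is a minimal sketch of the check a well-behaved crawler performs before requesting a page, using Python’s standard urllib.robotparser module. The rules, the ExampleBot name, and the example.com URLs are assumptions for illustration; a real crawler would download the file from the site instead of using an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real crawler would fetch it from
# https://<host>/robots.txt before requesting any other URL.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if robots_txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://www.example.com/blog/post"))      # True
print(is_allowed(ROBOTS_TXT, "ExampleBot", "https://www.example.com/private/notes"))  # False
```

Nothing forces a bot to run a check like this, which is why the file only restrains crawlers that choose to honor it.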

How to Configure Your Robots.txt File

A well-configured Robots.txt file can ensure your SEO efforts are streamlined. It can help push your best content forward while keeping the chaff at bay. However, these capabilities are only as effective as your implementation of the file contents.

Keep Your Robots.txt File Clean and Organized

- Comments are Your Friends: Using comments (#) can help you remember the purpose of each directive, making it easier to make updates or changes in the future.

- Group Directives: Group related user-agent directives together. For instance, all rules specific to Googlebot should be grouped under one user-agent directive for clarity.

Use “Disallow” and “Allow” Properly

- Precision is Key: Ensure you’re specific when using the Disallow directive. Avoid broad disallows that might inadvertently block essential pages or sections.

- Leverage the “Allow” Directive: The original 1994 robots.txt convention did not include “Allow”, but it is part of the current standard (RFC 9309) and is recognized by search engines like Google and Bing. It can be helpful to override broader Disallow rules for specific files or paths.
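As a rough illustration of an Allow exception inside a broader Disallow, the sketch below checks three paths against a small rule set with Python’s urllib.robotparser. The rules, the ExampleBot name, and the www.example.com host are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /private/ but open one article inside it.
RULES = """\
User-agent: *
Allow: /private/special-article.html
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def can_crawl(path: str, user_agent: str = "ExampleBot") -> bool:
    """Check a path on the hypothetical host www.example.com."""
    return parser.can_fetch(user_agent, "https://www.example.com" + path)


for path in ("/private/special-article.html", "/private/other.html", "/blog/"):
    print(path, can_crawl(path))
```

One design note: Python’s parser applies the first rule that matches, whereas Google and RFC 9309 apply the most specific (longest) matching rule regardless of order. Listing the Allow line before the broader Disallow keeps both interpretations in agreement.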

Specify a Crawl-Delay (If You Have Server Load Issues)

- Understand Crawl-Delay: This directive instructs crawlers on how long they should wait between successive requests. It can be helpful for sites that experience server strain from frequent crawling.

- Use with Caution: Not all search engines respect the crawl-delay directive. Googlebot ignores it, for example, while Bingbot honors it. It’s also essential not to set it too high, as it could reduce how often your site is crawled.
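From the crawler’s side, honoring a crawl-delay might look like the sketch below, which reads the value with urllib.robotparser and falls back to a default when none is declared. The ExampleBot name and the 2-second value are assumptions.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules for a crawler that identifies itself as "ExampleBot".
RULES = """\
User-agent: ExampleBot
Crawl-delay: 2
Disallow: /temp/
"""


def polite_delay(robots_txt: str, user_agent: str, default: float = 0.0) -> float:
    """Seconds to wait between requests, or `default` if none is declared."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


print(polite_delay(RULES, "ExampleBot"))  # 2.0
print(polite_delay(RULES, "OtherBot"))    # 0.0
```

A crawler that respects the directive would pause for that many seconds between successive requests to the same host.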

Link to the XML Sitemap

- Direct Crawlers to Your Sitemap: Including a pointer to your XML sitemap in Robots.txt can help search engines find and index your content more effectively.

- Sitemap Syntax: Use the “Sitemap:” directive followed by the full URL to your sitemap. This practice aids crawlers in uncovering all the rich content you want to present.
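To see how a crawler picks up the sitemap pointer, this small sketch extracts Sitemap entries with urllib.robotparser (the site_maps() method requires Python 3.8 or later). The rules and URL are hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file with one Sitemap line; the URL must be absolute.
RULES = """\
User-agent: *
Disallow: /temp/
Sitemap: https://www.example.com/sitemap.xml
"""


def sitemap_urls(robots_txt: str) -> list:
    """Return every sitemap URL declared in the file (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


print(sitemap_urls(RULES))  # ['https://www.example.com/sitemap.xml']
```

The Sitemap line is independent of the User-agent groups, so it can appear anywhere in the file.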

How to Create Your First Robots.txt File

Though the file’s impact is powerful, creating a Robots.txt file for the first time is relatively straightforward. Let’s walk through the steps to craft yours.

Step 1. Understand Your Website’s Structure

Begin by analyzing the layout of your site. Identify the areas you want to be indexed by search engines and those you wish to remain hidden. This might include private directories, temporary content, or backend data.

Step 2. Choose a Text Editor

Any simple text editor will do. Notepad (Windows), TextEdit (Mac, in plain-text mode), or code editors like VS Code are suitable. Avoid word processors like Microsoft Word, as they can add formatting that might corrupt the file.

Step 3. Draft the Basic Directives

Start with the most general directive.

User-agent: *

Disallow:

This tells all search engines that they can access all parts of your site.

Step 4. Add Specific Directives

To block specific sections, add Disallow rules beneath the User-agent line from Step 3, in place of the empty Disallow. For instance:

Disallow: /private/

Disallow: /temp/

This asks search engines not to crawl content within the “private” and “temp” directories.

Step 5. Add Advanced Directives (Optional)

If you’ve grasped the more advanced techniques, such as using wildcards or the “Allow” directive, incorporate them as needed.

Step 6. Include a Sitemap (Recommended)

Directing crawlers to your sitemap can enhance your SEO efforts:

Sitemap: https://www.yourwebsite.com/sitemap.xml

Step 7. Save and Name Your File

Save the file as robots.txt. Ensure it’s in plain text format and has no additional extensions like .txt.txt.
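If you would rather generate the file than type it by hand, the steps so far can be sketched in a few lines of Python. The function names build_robots_txt and save_robots_txt are made up for this example, and the paths and sitemap URL are the placeholders used above.

```python
from pathlib import Path


def build_robots_txt(disallow, sitemap=None):
    """Build plain-text robots.txt content for all crawlers."""
    lines = ["User-agent: *"]
    if disallow:
        lines += [f"Disallow: {path}" for path in disallow]
    else:
        lines.append("Disallow:")  # an empty Disallow allows everything
    if sitemap:
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


def save_robots_txt(content, directory="."):
    """Write the content as UTF-8 plain text to <directory>/robots.txt."""
    target = Path(directory) / "robots.txt"
    target.write_text(content, encoding="utf-8")
    return target


content = build_robots_txt(
    ["/private/", "/temp/"],
    sitemap="https://www.yourwebsite.com/sitemap.xml",
)
print(content)
```

Calling save_robots_txt(content) would then write the file to the current directory, ready for upload.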

Step 8. Upload to Your Website’s Root Directory

The Robots.txt file should reside in the root directory of your site. For example, if your website is https://www.yourwebsite.com, the Robots.txt file should be accessible at https://www.yourwebsite.com/robots.txt.
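Crawlers derive the file’s location from the scheme and host of whatever page they are about to visit, which is why the file cannot live in a subdirectory. A minimal sketch of that derivation, with hypothetical page URLs:

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs the given page URL."""
    parts = urlsplit(page_url)
    # Keep the scheme and host, replace the path, drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


print(robots_url("https://www.yourwebsite.com/blog/post?ref=home"))
# https://www.yourwebsite.com/robots.txt
print(robots_url("https://shop.yourwebsite.com/cart"))
# https://shop.yourwebsite.com/robots.txt
```

As the second call shows, each subdomain is a separate host and needs its own Robots.txt file.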

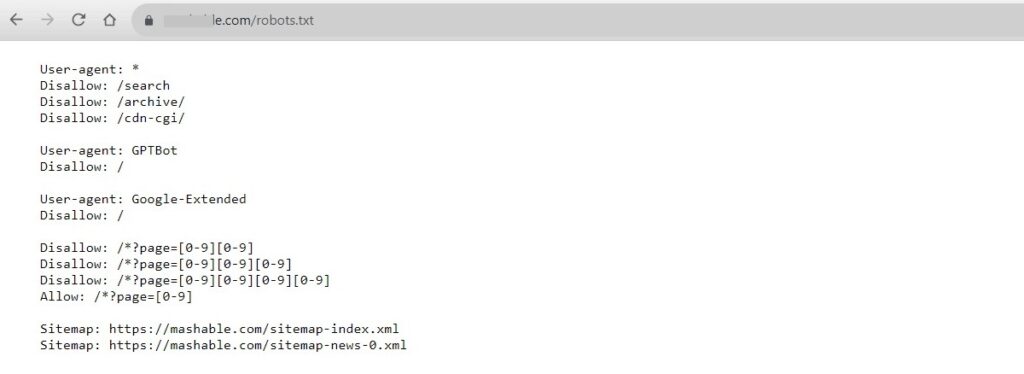

Example of a Well-Configured Robots.txt File

# Robots.txt for example.com

User-agent: * # Applies to every crawler that has no group of its own below

Disallow: /private/ # Blocks crawlers from accessing the ‘private’ directory

Disallow: /temp/ # Blocks crawlers from accessing the ‘temp’ directory

# Specific rules for Googlebot (groups are not merged, so the shared rules are repeated)

User-agent: Googlebot

Allow: /private/special-article.html # Allows Googlebot to access a specific file in the ‘private’ directory

Disallow: /private/

Disallow: /temp/

Disallow: /test/ # Blocks Googlebot from accessing the ‘test’ directory

# Link to the sitemap

Sitemap: https://www.example.com/sitemap.xml # Directs crawlers to the sitemap of the website

# Specific rules for Bingbot, with a crawl-delay to manage server load

User-agent: Bingbot

Disallow: /private/

Disallow: /temp/

Crawl-delay: 10 # Asks Bingbot to wait 10 seconds between requests

Understanding the Example

Comments: Starting a line with a # denotes a comment. Crawlers do not process it, but it helps humans to understand objectives better. Always use comments to clarify the purpose of specific directives, especially if they seem unclear to someone revisiting the file later.

User-agent Directive: This determines which crawler the following rules apply to. * is a wildcard that applies the rules to all crawlers. You can specify particular crawlers, like Googlebot or Bingbot, to set custom rules for them.

Disallow Directive: Tells crawlers which directories or pages they shouldn’t crawl. In this example, crawlers without a group of their own are kept out of /private/ and /temp/, and Googlebot is kept out of /test/. A crawler obeys only the most specific group that names it, so rules under * are not inherited by the Googlebot or Bingbot groups; any shared rules must be repeated in each group.

Allow Directive: Used to specify exceptions to the Disallow directives. It is part of the current robots.txt standard (RFC 9309), and search engines like Google and Bing honor it. Here it is meant to give Googlebot access to one specific page within the /private/ directory.

Sitemap Directive: A straightforward pointer to the site’s XML sitemap, helping crawlers find and index content more efficiently.

Crawl-delay Directive: Specifies the number of seconds a crawler should wait between requests. This example instructs Bingbot to observe a 10-second delay.
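One detail that is easy to miss when reading a file like this: groups are not merged. A crawler follows only the most specific group that names it and ignores the * group entirely. The sketch below demonstrates this with urllib.robotparser on a deliberately small, hypothetical file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: the Googlebot group does NOT repeat the /private/ rule.
RULES = """\
User-agent: *
Disallow: /private/

User-agent: Googlebot
Disallow: /test/
"""

parser = RobotFileParser()
parser.parse(RULES.splitlines())


def allowed(user_agent: str, path: str) -> bool:
    return parser.can_fetch(user_agent, path)


print(allowed("OtherBot", "/private/page"))   # False: falls back to the * group
print(allowed("Googlebot", "/private/page"))  # True: only the Googlebot group applies
print(allowed("Googlebot", "/test/page"))     # False
```

If a restriction should apply to every crawler, it has to be repeated in each named group.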

Common Mistakes Made in Robots.txt Configuration

The world of SEO is intricate, and even minor missteps can lead to unintended consequences. In the Robots.txt file, common mistakes can significantly hamper your site’s visibility in search results or leave sections you meant to keep out of search open to crawlers.

Blocking Important Resources or Entire Site

An unintentional “Disallow: /” blocks compliant search engines from crawling any part of your site. This directive must be used with caution and clear intent.

It’s easy to block more than intended with broad rules. Ensure you’re not inadvertently blocking essential scripts, stylesheets, or images that can impact your site’s appearance or function in search results.
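A quick pre-deployment check can catch both problems. The sketch below lists which of a handful of must-be-crawlable paths a draft file would block for a given crawler; the function name blocked_paths and the sample paths are assumptions, and the check uses Python’s urllib.robotparser, which matches plain path prefixes only (no wildcards).

```python
from urllib.robotparser import RobotFileParser


def blocked_paths(robots_txt, paths, user_agent="Googlebot"):
    """Return the subset of `paths` that the rules block for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [path for path in paths if not parser.can_fetch(user_agent, path)]


# Hypothetical list of URLs that must stay crawlable.
ESSENTIAL = ["/", "/assets/site.css", "/assets/app.js", "/blog/"]

draft = "User-agent: *\nDisallow: /assets/\n"
print(blocked_paths(draft, ESSENTIAL))
# ['/assets/site.css', '/assets/app.js']
```

An empty result means none of the listed paths is blocked; a result that includes "/" points to a site-wide block.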

Using the Wrong Syntax

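A common error is a malformed directive, such as a misspelled name (“Dissallow”), a missing colon (“Disallow /path”), or a path that doesn’t start with a slash (“Disallow: path”). Most parsers tolerate a missing space after the colon, but they silently ignore lines they can’t interpret, so a typo can quietly disable a rule.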

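Paths in the Robots.txt file are case-sensitive, although directive names such as Disallow are not. Always ensure that your paths match the actual URLs’ case to avoid accidental blocking or allowing. The file name itself must also be lowercase: robots.txt.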
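Because crawlers tend to skip lines they cannot interpret rather than report them, a small lint pass can help. This is only a sketch: the set of known directives is an assumption limited to the ones covered in this article, and the three checks are far from exhaustive.

```python
# Directive names covered in this article; anything else is flagged.
KNOWN = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint(robots_txt):
    """Return (line_number, message) pairs for suspicious lines."""
    problems = []
    for number, raw in enumerate(robots_txt.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append((number, "missing colon"))
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        if name.lower() not in KNOWN:
            problems.append((number, f"unknown directive {name!r}"))
        elif (name.lower() in {"allow", "disallow"}
              and value and not value.startswith(("/", "*"))):
            problems.append((number, "path should start with '/'"))
    return problems


sample = "User-agent: *\nDisalow: /tmp/\nDisallow /x\nDisallow: private/\n"
for problem in lint(sample):
    print(problem)
```

On the sample input, lines 2, 3, and 4 are reported: a misspelled directive, a missing colon, and a path without a leading slash.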

Forgetting to Update the File After Site Changes

As your site evolves, specific URLs may change or become obsolete. Regularly review and update your Robots.txt to reflect these changes.

If you’ve temporarily blocked a section for development or other reasons, set reminders to revisit and update the Robots.txt once it’s ready for public viewing.

Ignoring the Robots.txt in the Development Environment

Having the same Robots.txt in development and production environments is a frequent oversight. Ensure your development or staging environment is disallowed to avoid duplicate content issues or accidental indexing of unfinished sections, and make sure that blocking file is never deployed to the live site.
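One way to keep the two files from being mixed up is to choose the content from the deployment environment rather than copying files around. A minimal sketch, assuming your deployment exposes an environment name such as "production" or "staging" (the names and rules here are hypothetical):

```python
# Rules served on the live site (hypothetical paths).
PRODUCTION_RULES = "User-agent: *\nDisallow: /private/\nDisallow: /temp/\n"

# Rules served everywhere else: keep all compliant crawlers out.
BLOCK_ALL_RULES = "User-agent: *\nDisallow: /\n"


def robots_for(environment: str) -> str:
    """Return robots.txt content for the given environment name."""
    if environment == "production":
        return PRODUCTION_RULES
    # Any non-production environment defaults to blocking everything.
    return BLOCK_ALL_RULES


print(robots_for("staging"))
```

Defaulting to the blocking rules means an unrecognized or misspelled environment name errs on the side of not being crawled.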

Advanced Robots.txt Configuration Methods

While the basic configuration of a Robots.txt file can cater to most website needs, specific scenarios call for a more nuanced approach. Advanced techniques can give you finer-grained control over how search engine crawlers interact with your site.

Using Wildcard Entries for More Complex Rule Setting

The asterisk (*) serves as a wildcard character in the Robots.txt file, representing any sequence of characters.

Examples of Use:

- Disallow: /*? – This directive blocks crawlers from accessing any URL on your site that contains a question mark (?), commonly used in dynamic URLs.

- Disallow: /images/*.jpg – This would block crawlers from accessing any URL under /images/ that contains .jpg. To match only URLs that end in .jpg, add the end-of-URL anchor: Disallow: /images/*.jpg$.
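Wildcard rules are easier to reason about once you see how they match. The sketch below translates a rule path into a regular expression, treating * as any sequence of characters and a trailing $ as an end-of-URL anchor (both supported by Google and defined in RFC 9309). Python’s built-in urllib.robotparser does not understand wildcards, so the helper names pattern_to_regex and matches are written just for this example.

```python
import re


def pattern_to_regex(pattern: str):
    """Compile a robots.txt path pattern into a regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and let each * match any characters.
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """True if the rule pattern matches the URL path from its start."""
    return pattern_to_regex(pattern).match(path) is not None


print(matches("/*?", "/products?id=3"))                     # True
print(matches("/*?", "/products"))                          # False
print(matches("/images/*.jpg", "/images/a/photo.jpg"))      # True
print(matches("/images/*.jpg$", "/images/photo.jpg.html"))  # False
```

Without the trailing $, the last pattern would also match /images/photo.jpg.html, because an unanchored rule only needs to match the beginning of the URL.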

Avoiding the Noindex Directive in Robots.txt

“Noindex” was never part of the robots.txt standard, and Google stopped honoring it in Robots.txt on September 1, 2019. A Noindex line in the file should therefore not be relied on to keep pages out of search results.

To keep a page out of the index, use a robots meta tag within the page itself (or the X-Robots-Tag HTTP header) instead. The page must remain crawlable for this to work: if Robots.txt disallows the URL, crawlers never see the noindex instruction.
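If you rely on meta tags for noindex, it is worth verifying that the tag is actually present in the served HTML. Here is a minimal sketch using Python’s built-in html.parser; the class and function names are made up for this example, and the HTML snippet is hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives from <meta name="robots" content="...">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() == "robots":
            content = attributes.get("content") or ""
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def has_noindex(html: str) -> bool:
    """True if the page carries a robots meta tag containing noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```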

Host Directive for Multiple Versions of a Site

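The “Host” directive was a Yandex-specific extension that informed the search engine about the preferred domain for indexing when multiple domains serve the same content. Yandex announced in 2018 that it no longer uses the directive, and Google and Bing never supported it.

If you have both example.com and www.example.com serving the same content, a 301 redirect to the preferred version is a more reliable way to signal your preference than including Host: www.example.com in your Robots.txt.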

Clean URL Blocking

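If your site uses URL parameters for tracking or sorting, you can use Robots.txt directives to prevent crawlers from accessing URLs with specific parameters. For instance, Disallow: /*?sort= blocks any URL whose query string begins with the “sort” parameter. To also catch URLs where it follows another parameter (for example ?page=2&sort=price), add Disallow: /*&sort= as well.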
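One caveat worth checking against your own URLs: a rule such as /*?sort= only matches when the parameter comes directly after the question mark. The sketch below generates both rule variants for a parameter and lists the URLs a single rule would miss. The function names and sample URLs are hypothetical.

```python
from urllib.parse import parse_qs, urlsplit


def param_rules(name: str):
    """Disallow rules covering the parameter in first or later position."""
    return [f"Disallow: /*?{name}=", f"Disallow: /*&{name}="]


def missed_by_single_rule(urls, name: str):
    """URLs that carry the parameter but do not contain '?<name>='."""
    missed = []
    for url in urls:
        query = parse_qs(urlsplit(url).query, keep_blank_values=True)
        if name in query and f"?{name}=" not in url:
            missed.append(url)
    return missed


urls = ["/shoes?sort=price", "/shoes?page=2&sort=price", "/shoes?page=2"]
print(param_rules("sort"))
print(missed_by_single_rule(urls, "sort"))  # ['/shoes?page=2&sort=price']
```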

Final Thoughts

From understanding its foundational aspects to leveraging advanced techniques, optimizing the Robots.txt file is a testament to a webmaster’s commitment to SEO excellence. Mistakes, while common, can be sidestepped with vigilance and periodic audits.

The rewards of a well-configured Robots.txt go beyond just enhanced visibility. It also helps crawlers spend their time on the pages that matter and makes more efficient use of server resources.